Semantic Kernel: Empower Your Large Language Model Applications


Jun 24, 2024



What is Semantic Kernel?

Semantic Kernel is a lightweight software development kit (SDK) from Microsoft that facilitates the integration of AI Large Language Models (LLMs) with conventional programming languages.

Semantic Kernel's extensible programming model combines natural language semantic functions (prompts), traditional native functions (code), and embeddings-based memory. Together, these let an application mix LLM reasoning with ordinary program logic and its own data.

The idea behind Semantic Kernel (SK) emerged from the need to combine the capabilities of LLMs with conventional programming languages without compromising the expressiveness of either. The SDK gives developers tools to use LLMs in their applications while they keep writing traditional code.

Why do you need Semantic Kernel?

Semantic Kernel is aimed at developers who want to integrate AI into their existing applications. Its flexible, plugin-based architecture lets you add new AI features without hand-writing the orchestration plumbing (prompt templating, calls to model services, memory), so you can focus on business logic and new features. Whether you want natural language processing or other capabilities offered by the models you connect, SK gives you one consistent way to wire them into your code. It is open source.

Key features of Semantic Kernel

The key features of Semantic Kernel include its extensibility, which allows developers to add new natural language semantic functions to the system; its embeddings-based memory, which stores text as vectors so that semantically related content can be found later and supplied to the model as context; and its support for chaining, which allows multiple functions to be combined in a single operation.
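Embeddings-based memory boils down to storing each text with a vector and, at query time, returning the entries whose vectors are closest to the query's vector. The sketch below shows that retrieval step in plain C# using cosine similarity. It is not Semantic Kernel code: the three-dimensional vectors are hand-made assumptions standing in for real embeddings (which come from an embedding model and have far more dimensions), and `Recall`, `limit`, and `minRelevance` are illustrative names that only loosely mirror the limit and relevance settings of SK's memory search.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Toy memory: each entry pairs a text with a hand-made embedding (assumption for illustration).
var memory = new List<(string Text, double[] Vector)>
{
    ("Bitcoin uses proof-of-work.",         new[] { 0.9, 0.1, 0.0 }),
    ("Merkle trees compress transactions.", new[] { 0.1, 0.9, 0.1 }),
    ("The office closes at 6 pm.",          new[] { 0.0, 0.1, 0.9 }),
};

// Cosine similarity: dot(a, b) / (|a| * |b|); returns 0 for a zero vector.
double CosineSimilarity(double[] a, double[] b)
{
    double dot = 0, normA = 0, normB = 0;
    for (var i = 0; i < a.Length; i++)
    {
        dot += a[i] * b[i];
        normA += a[i] * a[i];
        normB += b[i] * b[i];
    }
    if (normA == 0 || normB == 0) return 0;
    return dot / (Math.Sqrt(normA) * Math.Sqrt(normB));
}

// Return up to `limit` texts scoring at least `minRelevance`, best match first.
List<string> Recall(double[] queryVector, int limit, double minRelevance) =>
    memory
        .Select(entry => (entry.Text, Score: CosineSimilarity(queryVector, entry.Vector)))
        .Where(hit => hit.Score >= minRelevance)
        .OrderByDescending(hit => hit.Score)
        .Take(limit)
        .Select(hit => hit.Text)
        .ToList();

// A query vector that, by construction, points close to the Merkle-tree entry.
var hits = Recall(new[] { 0.2, 0.8, 0.1 }, limit: 1, minRelevance: 0.5);
Console.WriteLine(hits[0]); // Merkle trees compress transactions.
```

The relevance threshold filters out weak matches, and the limit caps how much retrieved text ends up in the prompt.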

Core features:

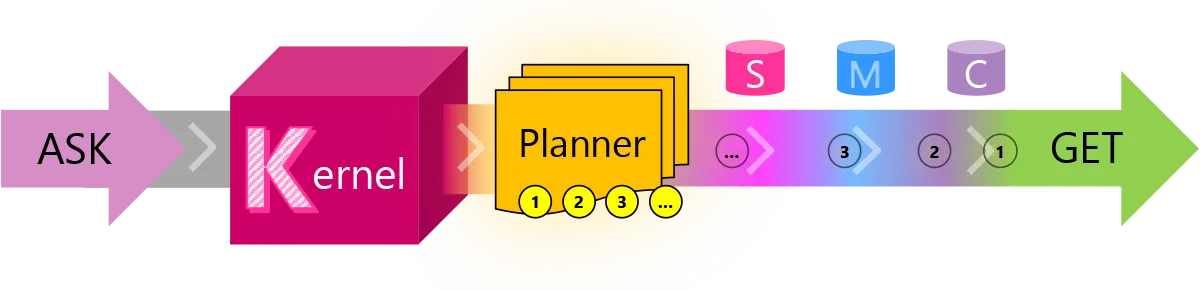

- Planner: automatically generates and executes a plan of steps to achieve a user's goal.

- Plugins (called skills in earlier versions): reusable components that can be shared across different apps.

- Memory: stores context and embeddings in memory or in other storage, such as a vector database.

- Connectors: out-of-the-box connectors for AI services and data sources (like Microsoft Graph).

- Custom functions: your own logic written in code (native functions), callable alongside prompts.

- Chaining: combines requests so that the output of one step feeds the next, forming a pipeline.
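Chaining is easiest to picture as function composition: each step receives the previous step's output as its input. Here is a minimal sketch with plain C# delegates. The step functions are hypothetical stand-ins for kernel functions, and `RunPipeline` is an illustrative helper, not part of the Semantic Kernel API.

```csharp
using System;
using System.Linq;

// Hypothetical pipeline steps; in Semantic Kernel each could be a prompt or native function.
Func<string, string> trim = text => text.Trim();
Func<string, string> normalizeSpaces = text =>
    string.Join(" ", text.Split(' ', StringSplitOptions.RemoveEmptyEntries));
Func<string, string> addLabel = text => $"Cleaned: {text}";

// Chaining: fold the input through the steps in order, feeding each output to the next step.
string RunPipeline(string input, params Func<string, string>[] steps) =>
    steps.Aggregate(input, (current, step) => step(current));

var output = RunPipeline("  bitcoin   is a   peer-to-peer cash system  ",
    trim, normalizeSpaces, addLabel);
Console.WriteLine(output); // Cleaned: bitcoin is a peer-to-peer cash system
```

With real kernel functions the intermediate value would be passed along as a function argument instead of a plain string, but the composition idea is the same.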

Semantic Kernel supports C#, Python, and Java.

Use cases

Semantic Kernel suits many scenarios, such as building complex user flows and pipelines powered by LLMs.

Common use cases:

- a chatbot that lets an organization query its own data;

- document summarization;

- customer service automation.

The best-known alternative to Semantic Kernel is LangChain.

At the time of writing, LangChain has many more integrations and plugins. But if you work mainly in .NET, Semantic Kernel is a great choice for building LLM applications.

Sample

Let's build an app that answers questions about our PDF files. As test data we will use a popular sample for querying PDF files: bitcoin.pdf, the Bitcoin whitepaper.

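Initialize Semantic Kernel:

```csharp
// The memory and text-chunking APIs used in this sample are marked experimental in SK 1.x.
#pragma warning disable SKEXP0001, SKEXP0010, SKEXP0050

using Microsoft.Extensions.Configuration;                   // ConfigurationBuilder, AddJsonFile, Get<T>
using Microsoft.SemanticKernel;                             // Kernel, KernelArguments
using Microsoft.SemanticKernel.Connectors.OpenAI;           // WithAzureOpenAITextEmbeddingGeneration
using Microsoft.SemanticKernel.Memory;                      // MemoryBuilder, VolatileMemoryStore
using Microsoft.SemanticKernel.Plugins.Memory;              // TextMemoryPlugin
using Microsoft.SemanticKernel.Text;                        // TextChunker
using UglyToad.PdfPig.DocumentLayoutAnalysis.TextExtractor; // ContentOrderTextExtractor

var configuration = new ConfigurationBuilder()
    .AddJsonFile("appsettings.json", optional: true, reloadOnChange: true)
    .AddJsonFile("appsettings.development.json", optional: true, reloadOnChange: true)
    .Build();

// Initialize Semantic Kernel.
// AzureOpenAIOptions is the sample's own settings class (DeploymentId, Endpoint, Key).
var embeddingOptions = configuration.GetSection("Embedding").Get<AzureOpenAIOptions>()
    ?? throw new InvalidOperationException("Missing 'Embedding' configuration section.");
var completionOptions = configuration.GetSection("Completion").Get<AzureOpenAIOptions>()
    ?? throw new InvalidOperationException("Missing 'Completion' configuration section.");

// Chat completion model that answers the questions.
var kernel = Kernel.CreateBuilder()
    .AddAzureOpenAIChatCompletion(completionOptions.DeploymentId, completionOptions.Endpoint, completionOptions.Key)
    .Build();

// Embedding model plus an in-process vector store, used as semantic memory.
var memoryBuilder = new MemoryBuilder();
memoryBuilder.WithAzureOpenAITextEmbeddingGeneration(embeddingOptions.DeploymentId, embeddingOptions.Endpoint,
    embeddingOptions.Key);
memoryBuilder.WithMemoryStore(new VolatileMemoryStore());
var memory = memoryBuilder.Build();
```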

Parse PDF files and initialize Semantic Kernel memory:

```csharp
// Parse PDF files and initialize Semantic Kernel memory.
// MAX_CONTENT_ITEM_SIZE and memoryCollectionName are defined in the full source code.
var pdfFiles = Directory.GetFiles(Directory.GetCurrentDirectory(), "*.pdf");

foreach (var pdfFileName in pdfFiles)
{
    using var pdfDocument = UglyToad.PdfPig.PdfDocument.Open(pdfFileName);

    foreach (var pdfPage in pdfDocument.GetPages())
    {
        var pageText = ContentOrderTextExtractor.GetText(pdfPage);

        // Split long pages into smaller chunks before embedding them.
        var paragraphs = new List<string>();
        if (pageText.Length > MAX_CONTENT_ITEM_SIZE)
        {
            var lines = TextChunker.SplitPlainTextLines(pageText, MAX_CONTENT_ITEM_SIZE);
            paragraphs = TextChunker.SplitPlainTextParagraphs(lines, MAX_CONTENT_ITEM_SIZE);
        }
        else
        {
            paragraphs.Add(pageText);
        }

        // Embed and store each chunk. Separators keep IDs unique (page 1/chunk 12 vs. page 11/chunk 2),
        // and the loop index avoids IndexOf returning the same position for duplicate chunks.
        for (var i = 0; i < paragraphs.Count; i++)
        {
            var id = $"{pdfFileName}_{pdfPage.Number}_{i}";
            await memory.SaveInformationAsync(memoryCollectionName, paragraphs[i], id);
        }
    }
}

// Expose memory recall to prompts as the "TextMemoryPlugin" plugin.
kernel.ImportPluginFromObject(new TextMemoryPlugin(memory), nameof(TextMemoryPlugin));
```
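The chunking step delegates to `TextChunker`. To show the idea behind it, here is a simplified greedy chunker that packs whole lines into chunks of at most `maxChars` characters, together with a composite chunk ID that puts separators between file name, page number, and chunk index. Assumptions: sizes are counted in characters (TextChunker counts tokens and chooses smarter split points), and `ChunkText` and `ChunkId` are hypothetical helpers for illustration only.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical greedy chunker: packs whole lines into chunks of at most maxChars characters.
List<string> ChunkText(string text, int maxChars)
{
    var result = new List<string>();
    var current = "";
    foreach (var rawLine in text.Split('\n'))
    {
        var line = rawLine.Trim();
        if (line.Length == 0) continue;

        // A single line longer than the limit is cut into fixed-size pieces.
        while (line.Length > maxChars)
        {
            if (current.Length > 0) { result.Add(current); current = ""; }
            result.Add(line.Substring(0, maxChars));
            line = line.Substring(maxChars);
        }

        // Close the current chunk if appending this line (plus a space) would overflow it.
        if (current.Length > 0 && current.Length + 1 + line.Length > maxChars)
        {
            result.Add(current);
            current = "";
        }
        current = current.Length == 0 ? line : current + " " + line;
    }
    if (current.Length > 0) result.Add(current);
    return result;
}

// Hypothetical ID helper: plain concatenation would turn both (page 1, chunk 12)
// and (page 11, chunk 2) into "bitcoin.pdf112"; separators keep them distinct.
string ChunkId(string file, int page, int index) => $"{file}_{page}_{index}";

var chunks = ChunkText("one two\nthree\nfour five six\nseven", maxChars: 13);
for (var i = 0; i < chunks.Count; i++)
{
    Console.WriteLine($"{ChunkId("bitcoin.pdf", 1, i)}: {chunks[i]}");
}
// bitcoin.pdf_1_0: one two three
// bitcoin.pdf_1_1: four five six
// bitcoin.pdf_1_2: seven
```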

Ask a question:

Prompt (prompt.txt):

```
{{TextMemoryPlugin.Recall $input}}

You are an intelligent assistant helping a Contoso Inc. employee.
Use 'you' to refer to the individual asking the questions even if they ask with "I".
Answer the following question using only the data provided in the sources above.
If you cannot answer using the sources above, say you don't know.

Question: {{$input}}
```

Invoke the prompt function:

```csharp
// Ask Semantic Kernel
var query = "How is the work by \"R.C. Merkle\" used in this paper?";
var promptTemplate = await File.ReadAllTextAsync("prompt.txt");

// CreateFunctionFromPrompt returns the function without adding it to any plugin,
// so invoke it directly instead of looking it up in kernel.Plugins.
var queryFunction = kernel.CreateFunctionFromPrompt(promptTemplate, functionName: "QuerySkill");

var result = await kernel.InvokeAsync(queryFunction, new KernelArguments
{
    { "input", query },
    // Recall reads the collection name from the arguments; without it,
    // TextMemoryPlugin searches its default collection.
    { "collection", memoryCollectionName },
});

Console.WriteLine(result.GetValue<string>());
```

Response:

The work by R.C. Merkle is referenced in the paper as "Protocols for public key cryptosystems" and is mentioned as a source in the list of references. However, it is not specified how it is used in the paper.
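Before the model produces an answer like this, Semantic Kernel renders the prompt template: `{{$input}}` becomes the question, and a recall call such as `{{TextMemoryPlugin.Recall $input}}` is replaced by whatever the memory plugin returns. The following deliberately simplified renderer mimics those two substitutions. It is not SK's template engine (which supports more syntax, such as named arguments), and the stubbed recall function and its return value are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Text.RegularExpressions;

// Simplified renderer (NOT Semantic Kernel's template engine). Supported syntax:
//   {{$name}}                  -> the value of variable "name"
//   {{Plugin.Function $name}}  -> the registered function, called with that variable's value
string Render(string template,
              IReadOnlyDictionary<string, string> variables,
              IReadOnlyDictionary<string, Func<string, string>> functions) =>
    Regex.Replace(template, @"\{\{\s*(?:([\w.]+)\s+)?\$(\w+)\s*\}\}", match =>
    {
        var value = variables[match.Groups[2].Value];
        return match.Groups[1].Success
            ? functions[match.Groups[1].Value](value) // function call: substitute its result
            : value;                                  // plain variable: substitute its value
    });

// Hypothetical stub standing in for the memory plugin's recall function;
// a real one would run an embeddings search over the stored chunks.
var functions = new Dictionary<string, Func<string, string>>
{
    ["TextMemoryPlugin.Recall"] = question => "R.C. Merkle, \"Protocols for public key cryptosystems\"",
};
var variables = new Dictionary<string, string>
{
    ["input"] = "How is Merkle's work used?",
};

var prompt = Render("{{TextMemoryPlugin.Recall $input}}\nQuestion: {{$input}}", variables, functions);
Console.WriteLine(prompt);
```

The rendered prompt is what the model actually sees, which is why the quality of the recalled chunks directly limits the quality of the answer.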

Potential improvements:

- store Semantic Kernel memory in a vector database instead of the in-process VolatileMemoryStore;

- improve document parsing to handle complex structures (tables, images, etc.) with services like Azure AI Document Intelligence.

You can find the full source code on GitHub.
